Data Science Corner

The Bias-Variance Tradeoff

Written by Justin Groves, PhD

One of the main hurdles for any data scientist is bias. When making any kind of predictive model a variety of different biases can show up. You may be familiar with selection and confirmation bias which affect data collection. But today, I’d like to talk about a different bias that comes into play during model development. The Bias-Variance Tradeoff can ruin a predictive model if not taken into consideration.

To understand bias-variance tradeoff, we need to understand what a predictive model does. At the end of the day, a predictive model takes in data it knows something about and then tries to predict something about data that it doesn’t yet know about. For example, when scrolling on a streaming service like Netflix, you’ve probably noticed a percentage on some movies predicting how likely you are to like that movie. This is a predictive model: Netflix takes in information about movies it knows you have seen and liked and tries to predict what movies you’ll like that you haven’t seen. Netflix knows that I watched all eleven seasons of The Great British Baking Show, so maybe I’ll like to watch Iron Chef, too. The subtlety is that Netflix doesn’t know whether I like Iron Chef until I try watching it, but they’re predicting that I might.

The catch is that the predictive model can be wrong. And if it’s wrong a lot, then it’s not a good model. If Netflix recommends 10 movies and I only like 2 of them, that’s not a good model. In that case, Netflix would want to find a way to improve the model to make better predictions. One of the ways it could do so is by using the bias-variance tradeoff. This bias is different than the selection and confirmation bias from before.

Let’s keep using our Netflix analogy to understand what this bias is. Suppose I watch a bunch of movies and give them either a thumbs up or thumbs down afterwards. Netflix will take that data and create a model that specifically predicts whether I will like a movie I haven’t seen before. (How exactly? That’s proprietary! 😉) In theory, the movies that I watched (and liked) will cause the model to learn something about me to then make a prediction. But there’s a catch! If the model isn’t complex enough, it can end up ignoring the movies I liked and result in a bad model. For example, if the model only learns from feedback given on movies in the Western genre and I didn’t watch any Westerns, it won’t be able to learn from my movies and will completely fail to predict my love of romantic comedies set in New York like You’ve Got Mail.

This specific kind of error is just called bias (or error bias) and is based entirely on a model’s ability to learn from the input (or training) data. That ability is dependent not on how good the input data is, but instead the model’s ability to learn from that input data. If a model doesn’t learn from the input, then it will make bad predictions and be a bad model. So how do we reduce error from this bias?

Typically, this bias comes up because a model is lacking in complexity. If a movie prediction model bases everything off just Westerns, then it’s not very complex. So, we need to expand our model to something more complex that will incorporate genres outside of Westerns. What this does is add an element of variance to the model, because now the model will be able to vary predictions across multiple genres.

However, there must be some balance and we can make a model vary too much. With the Netflix example, one could end up adding too many different genres to the model. There are over a hundred different genres and subgenres that could be used. Adding too many of them will result in a model that is too varied. For example, adding in a subgenre of “Road Buddy Sci-Fi Western Disaster Film” is too specific. Unless someone specifically watches a lot of “Road Buddy Sci-Fi Western Disaster Films”, the model won’t be able to learn if someone likes that genre or not – the grouping is simply too specific to be useful.

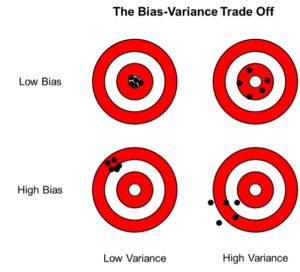

This is the heart of the bias-variance tradeoff. Make your model too simple, and it will be biased and not able to learn from the input data. Make your model too complex and varied, then your model won’t be able to make reliable predictions at all. We can visualize the tradeoff nicely with this graphic of some targets:

Typically, one wants a model that has low bias, and low variance. This helps avoid two common model errors: underfitting (high bias and low variance) and overfitting (low bias and high variance). This is much easier said than done! Finding that sweet spot is often model dependent and sometimes described as more of an art than a hard science.

A nice way to do this in our Netflix model would be to expand out to the lowest number of genres that still cover most of the genres one would want. According to MasterClass, we can do this with about 13 genres. That will help us not to be biased towards Westerns but also vary among the other core genres.

The bias-variance tradeoff is a familiar phrase to any data scientist, but now it can be a familiar phrase to you, too! Next time you hear someone talk about AI or a predictive machine learning model, think about the bias-variance tradeoff and how that might affect the model.